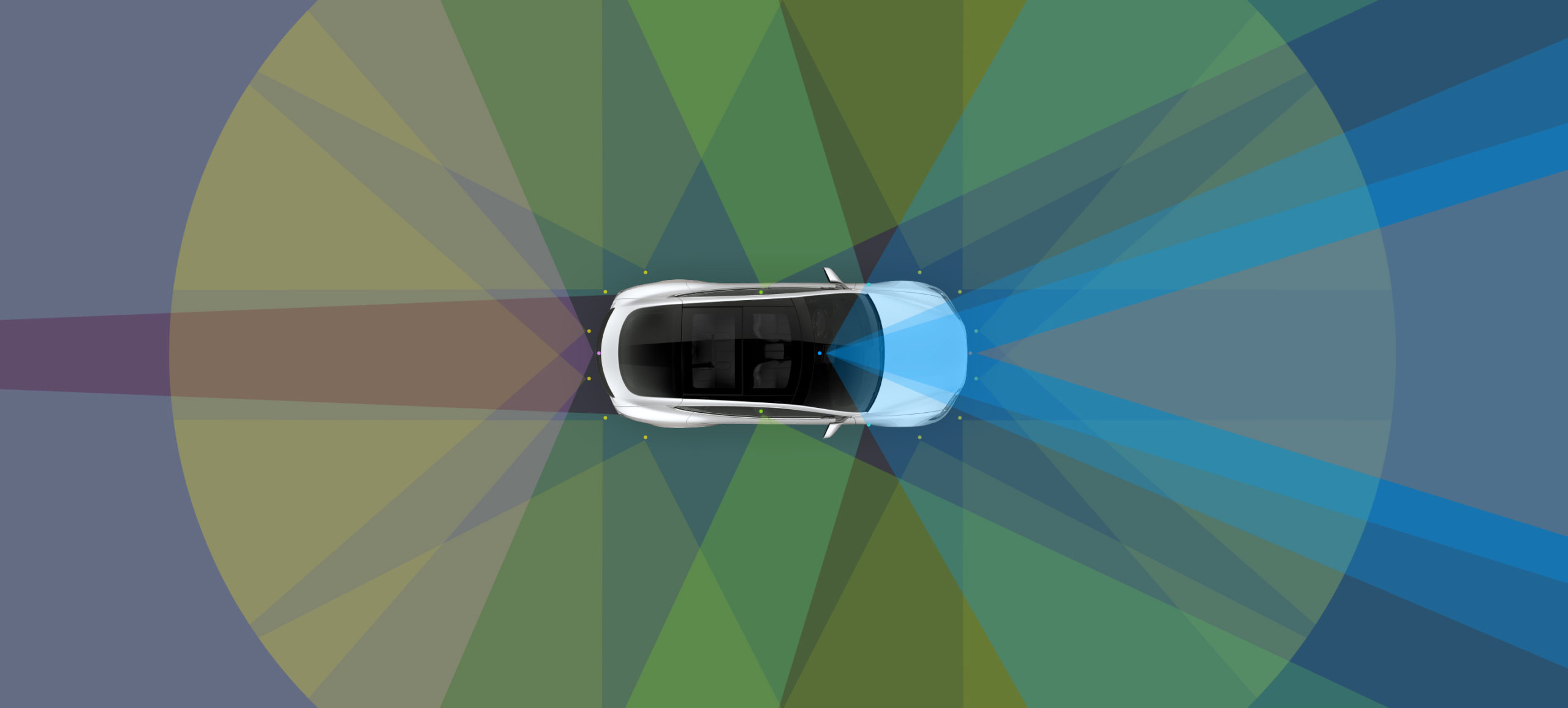

With neither lidar nor, now, radar, the vision technology Tesla is developing is being asked to perform tasks that would have been unthinkable for a camera system not long ago. While many manufacturers fill their vehicles with multiple sensors, the Palo Alto company seems intent on reducing components as much as possible.

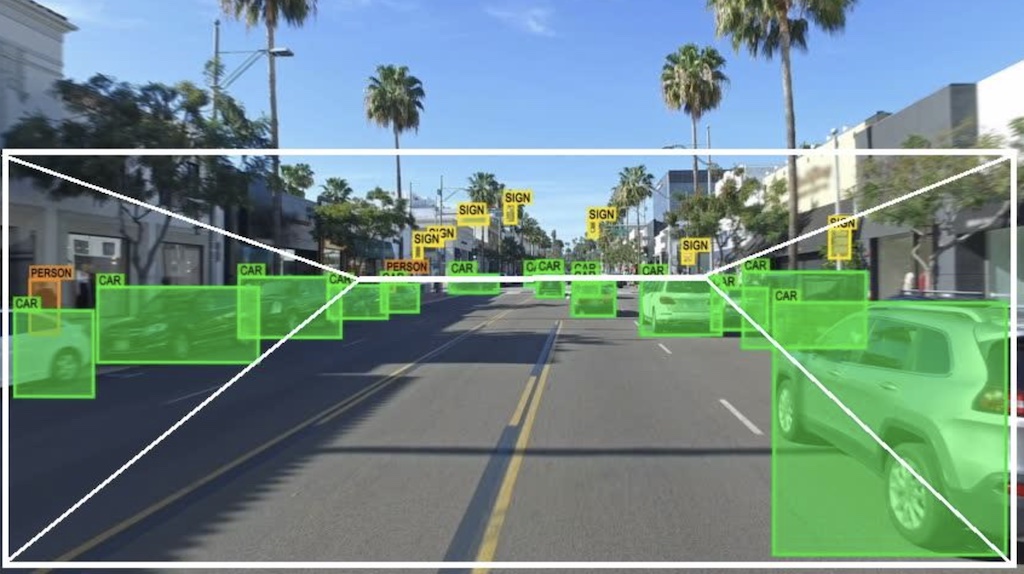

Last week, Tesla received a patent for a system that will allow it to “estimate the properties of objects using data from visual images.” The patent confirms the American brand’s investment in image recognition and in how its electric cars perceive their surroundings, as part of its push toward fully autonomous driving.

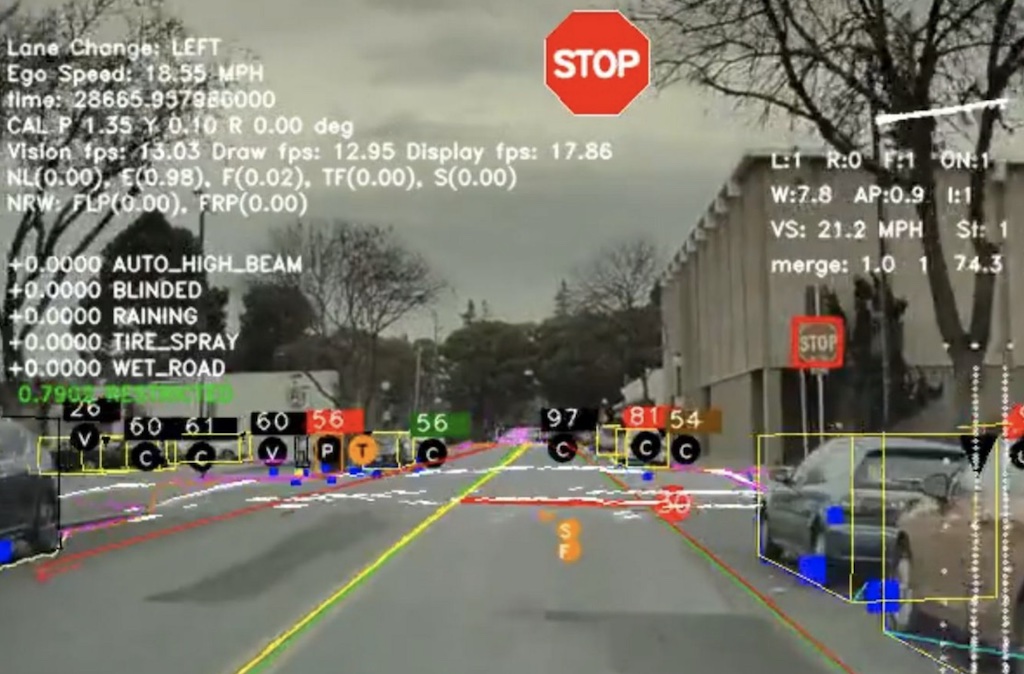

The patent describes a system built on two neural networks that can measure the distance to objects using only image data.

The first neural network determines the distance to objects in images captured by the cameras around the vehicle. The second neural network is used to create training data, annotating images that are then used to train the first network.

Elon Musk himself has even suggested that, as a result of Tesla’s push toward a full, pure vision system, radar sensors will at some point no longer be necessary.

Tesla has always been known for eliminating elements that seemed essential in traditional cars, such as the ignition key or start button, along with other features meant to make life on board easier and the vehicle more comfortable to use.

The Palo Alto company argues that an autonomous vehicle needs the right number of sensors, chosen without limiting the amount of data the car can capture and process.

For this reason, vision is key to saving the costs of fitting lidar, radar, ultrasonic sensors, and other redundant components, which overload the system that must process all the data and create bandwidth problems for the car’s computer.

For Tesla, cutting components is necessary to reduce the complexity and cost of each electric car, because “as the number and types of sensors increase, so does the complexity and cost of the system.”

According to the brand, “emitting distance sensors like lidar are often expensive to fit into a mass-production vehicle. Also, each additional sensor increases the input bandwidth requirements for the autonomous driving system.”

To address this, Tesla states that “there is a need to find the optimal configuration of sensors in a vehicle. The design should limit the total number of sensors without limiting the amount and type of data captured to accurately describe the surrounding environment and safely control the car.”